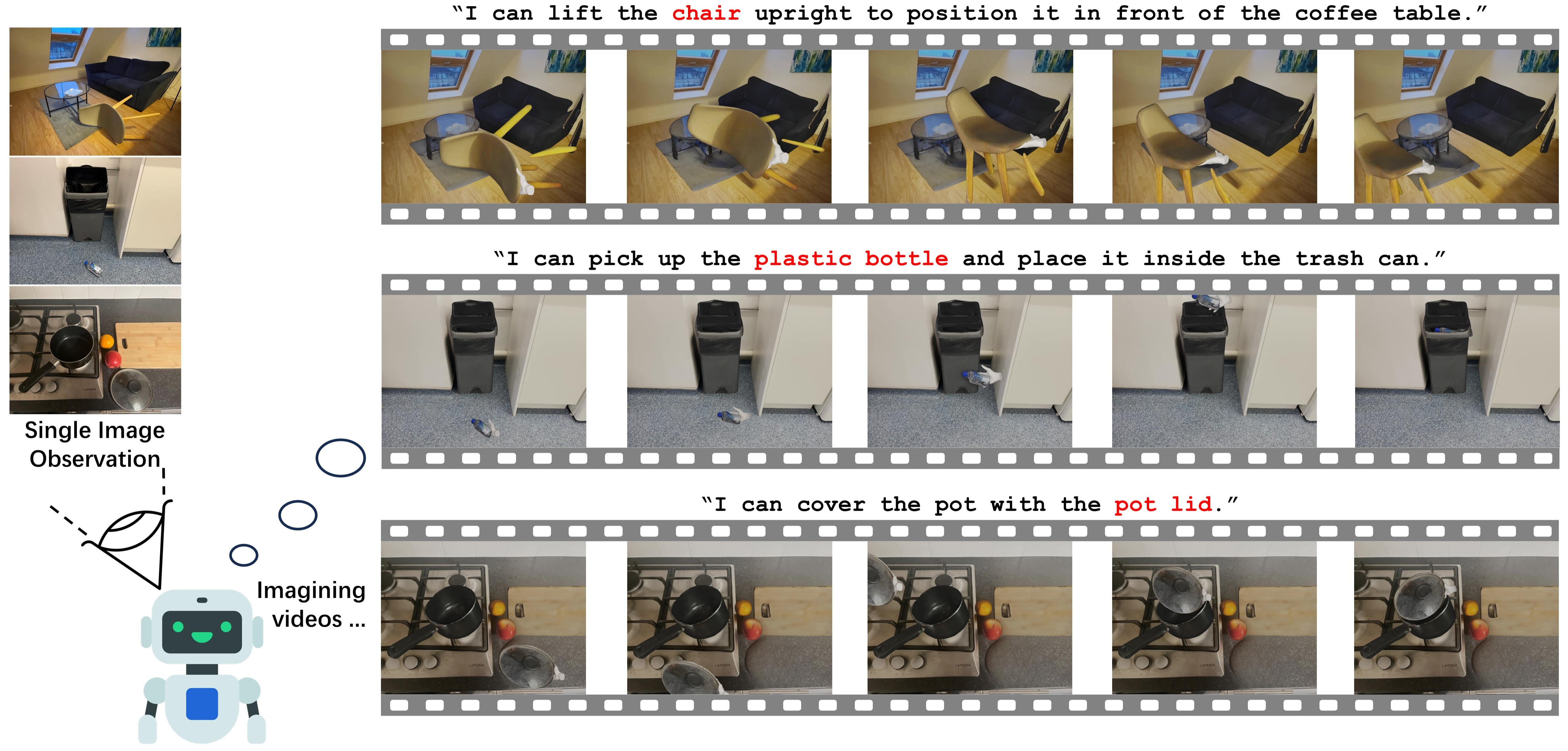

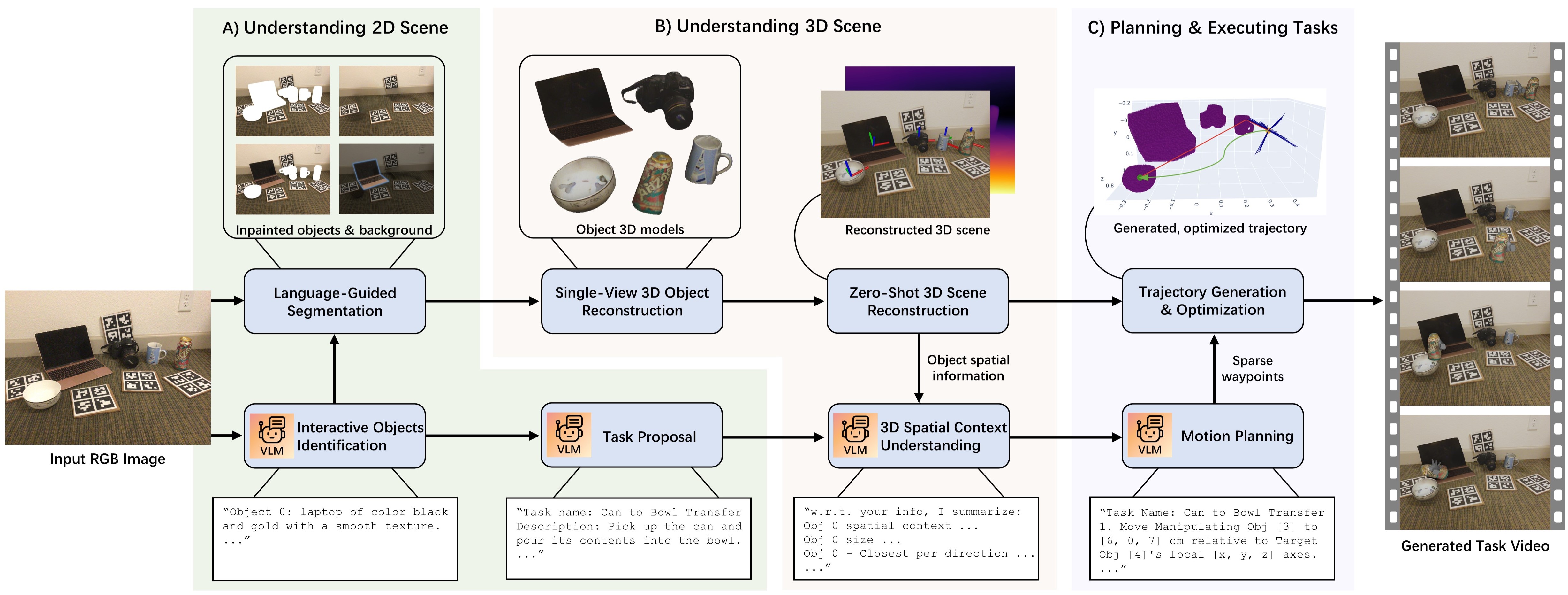

Humans can not only recognize and understand the world in its current state but also envision future scenarios that extend beyond immediate perception. To emulate this profound human capacity, we introduce zero-shot task hallucination: given a single RGB image of any scene comprising unknown environments and objects, our model can identify potential tasks and imagine their execution in a vivid narrative, realized as a video. We develop a modular pipeline that progressively enhances scene decomposition, comprehension, and reconstruction, incorporating a Vision-Language Model (VLM) for dynamic interaction and 3D motion planning for object trajectories. Our model can discover diverse tasks, with the generated task videos demonstrating realistic and compelling visual outcomes that are understandable by both machines and humans.

We develop a modular pipeline that progressively enhances scene decomposition, comprehension, and reconstruction, incorporating a Vision-Language Model (VLM) for dynamic interaction and 3D motion planning for object trajectories, producing geometry-aware task videos. To understand the image scene, we use the VLM to identify interactive objects and propose context-dependent tasks in a role-play manner, complemented by language-guided segmentation and repainting models to obtain occlusion-free object masks. Elevating 2D understanding to 3D, we use depth estimation and single-view 3D reconstruction models to generate a semi-reconstructed 3D scene, in which foreground objects have a full 3D representation and the background is represented as a plane. With the reconstructed 3D scene, we introduce a novel axes-constrained 3D planning approach that enables the VLM to plan the motion of objects for given tasks by specifying waypoints. Combined with traditional path planning algorithms, this lets our model generate complete, feasible, and natural trajectories from a single image observation. Because the entire framework is fully modularized, each component can be easily replaced with the latest improvements within its specific domain.
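To make the waypoint idea concrete, the following is a minimal sketch and not the pipeline's actual planner. It assumes that "axes-constrained" means each planned step displaces the object along exactly one scene axis, and it substitutes plain linear interpolation for the traditional path planning stage. The names `AxisMove`, `waypoints_from_moves`, and `densify` are hypothetical and introduced only for illustration.

```python
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float, float]
AXES = {"x": 0, "y": 1, "z": 2}


@dataclass
class AxisMove:
    """One hypothetical planner step: a signed displacement along a single axis."""
    axis: str        # "x", "y", or "z"
    distance: float  # signed displacement in scene units


def waypoints_from_moves(start: Point, moves: List[AxisMove]) -> List[Point]:
    """Turn a sequence of single-axis moves into 3D waypoints (assumed constraint)."""
    points = [start]
    current = list(start)
    for move in moves:
        if move.axis not in AXES:
            raise ValueError(f"unknown axis: {move.axis!r}")
        current[AXES[move.axis]] += move.distance
        points.append((current[0], current[1], current[2]))
    return points


def densify(waypoints: List[Point], steps_per_segment: int) -> List[Point]:
    """Linearly interpolate between consecutive waypoints.

    This stands in for a real path planner; it does no collision checking.
    """
    if steps_per_segment < 1:
        raise ValueError("steps_per_segment must be at least 1")
    path = [waypoints[0]]
    for a, b in zip(waypoints, waypoints[1:]):
        for i in range(1, steps_per_segment + 1):
            t = i / steps_per_segment
            path.append((a[0] + t * (b[0] - a[0]),
                         a[1] + t * (b[1] - a[1]),
                         a[2] + t * (b[2] - a[2])))
    return path


# Example: lift an object, slide it sideways, then set it down.
moves = [AxisMove("z", 0.25), AxisMove("x", 0.5), AxisMove("z", -0.25)]
waypoints = waypoints_from_moves((0.0, 0.0, 0.0), moves)
trajectory = densify(waypoints, steps_per_segment=2)
```

Restricting each step to one axis keeps the plan easy for a language model to express and easy to validate. A fuller system would replace `densify` with a planner that accounts for collisions and object orientation.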

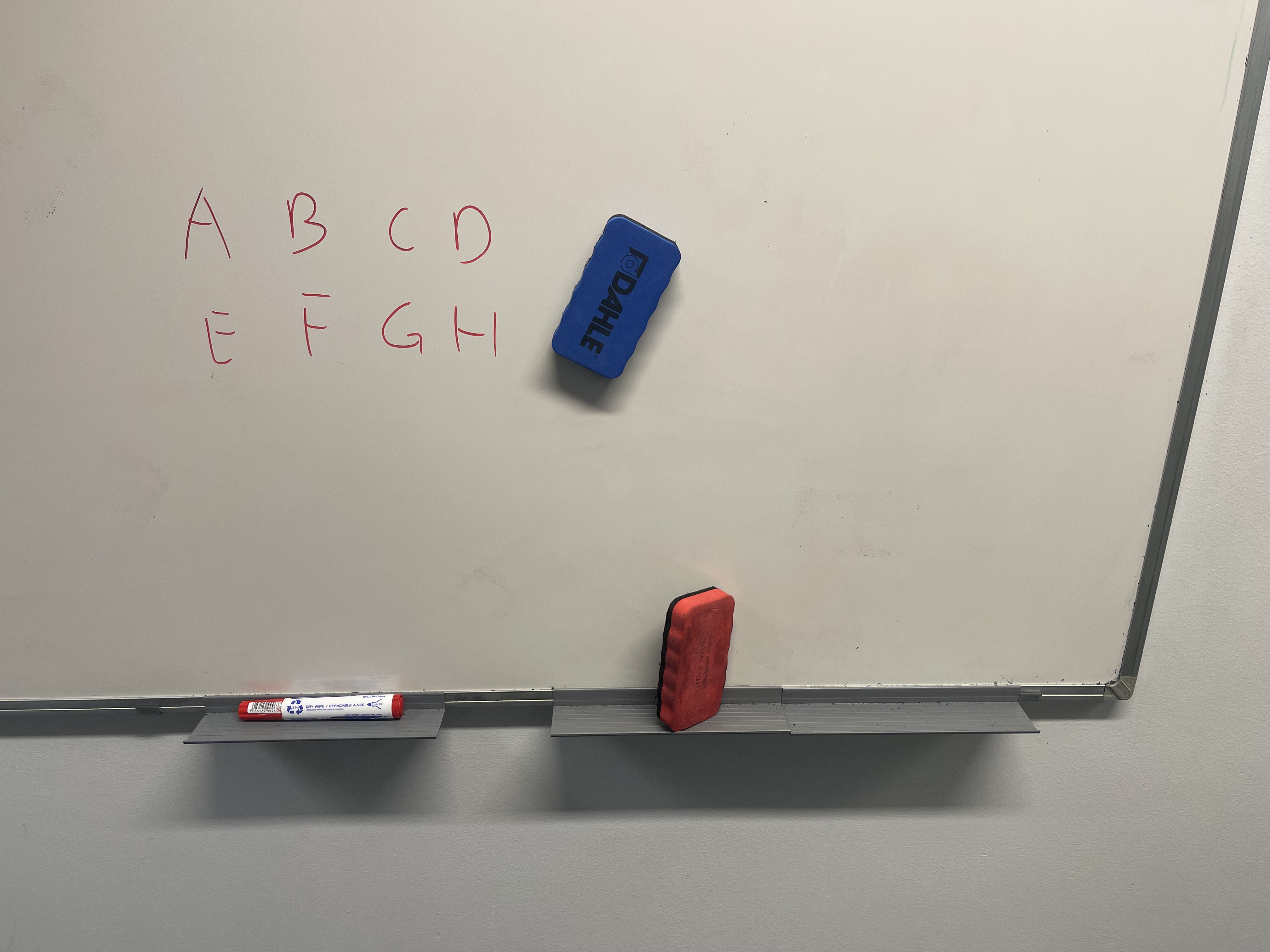

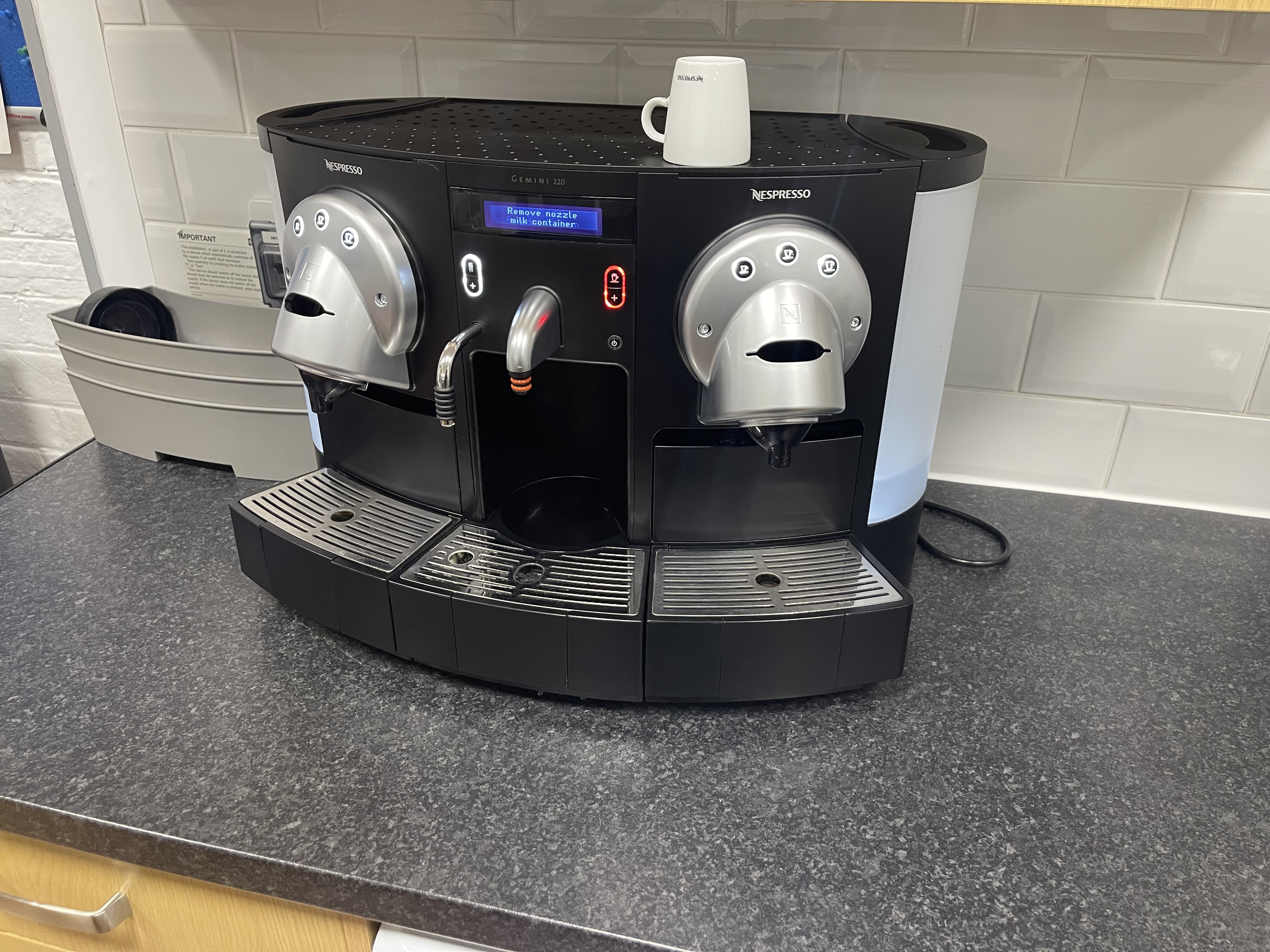

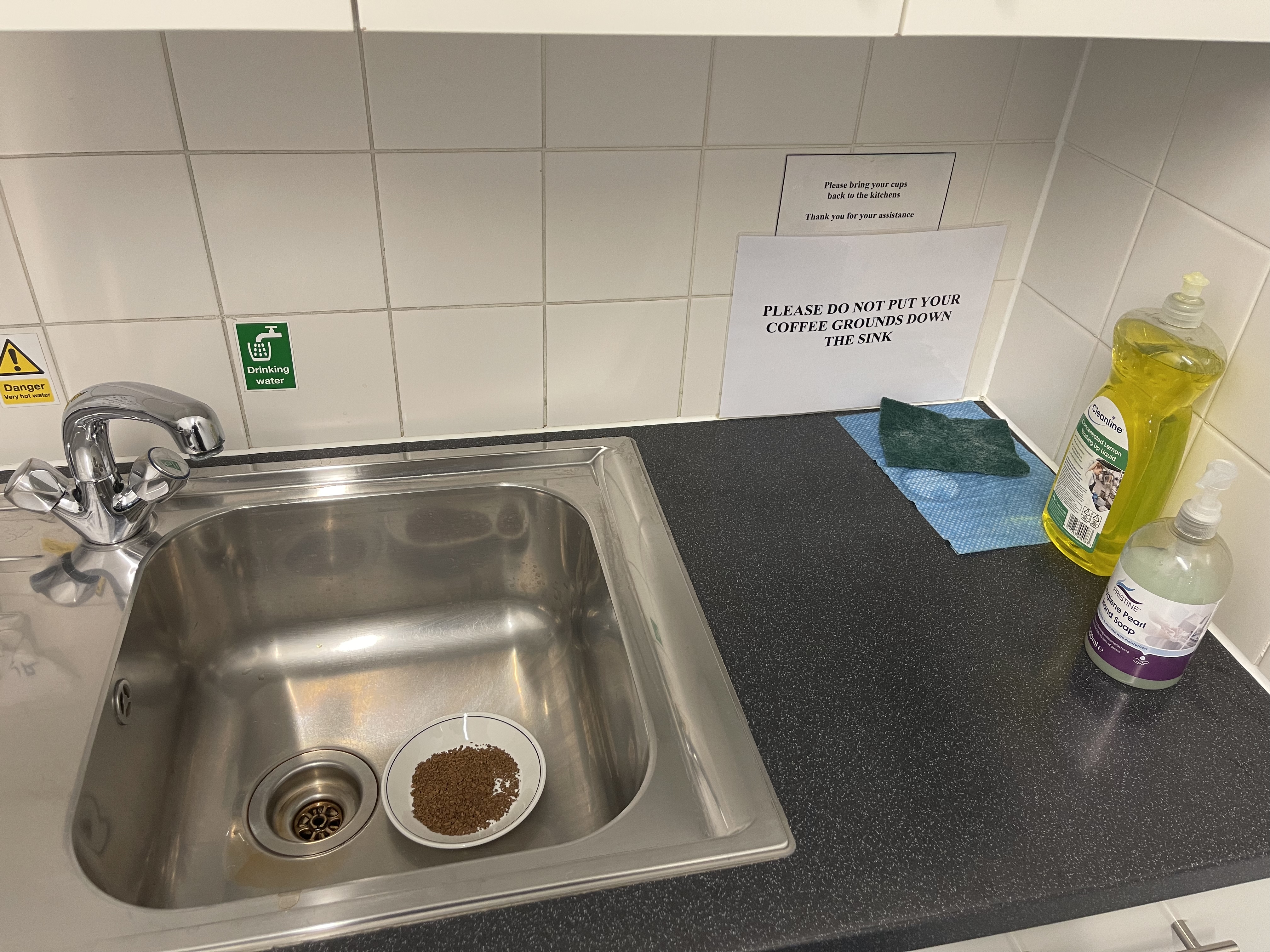

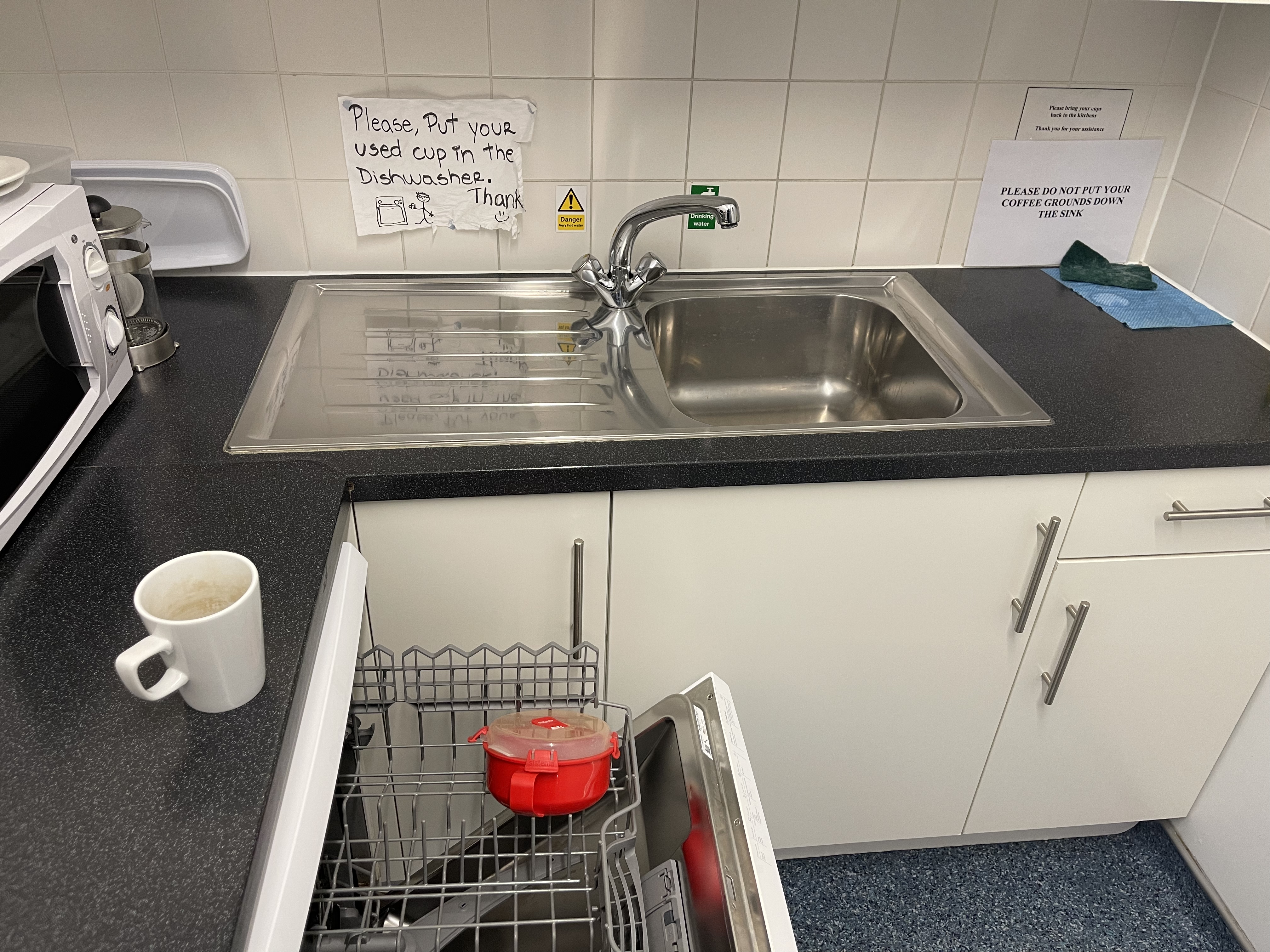

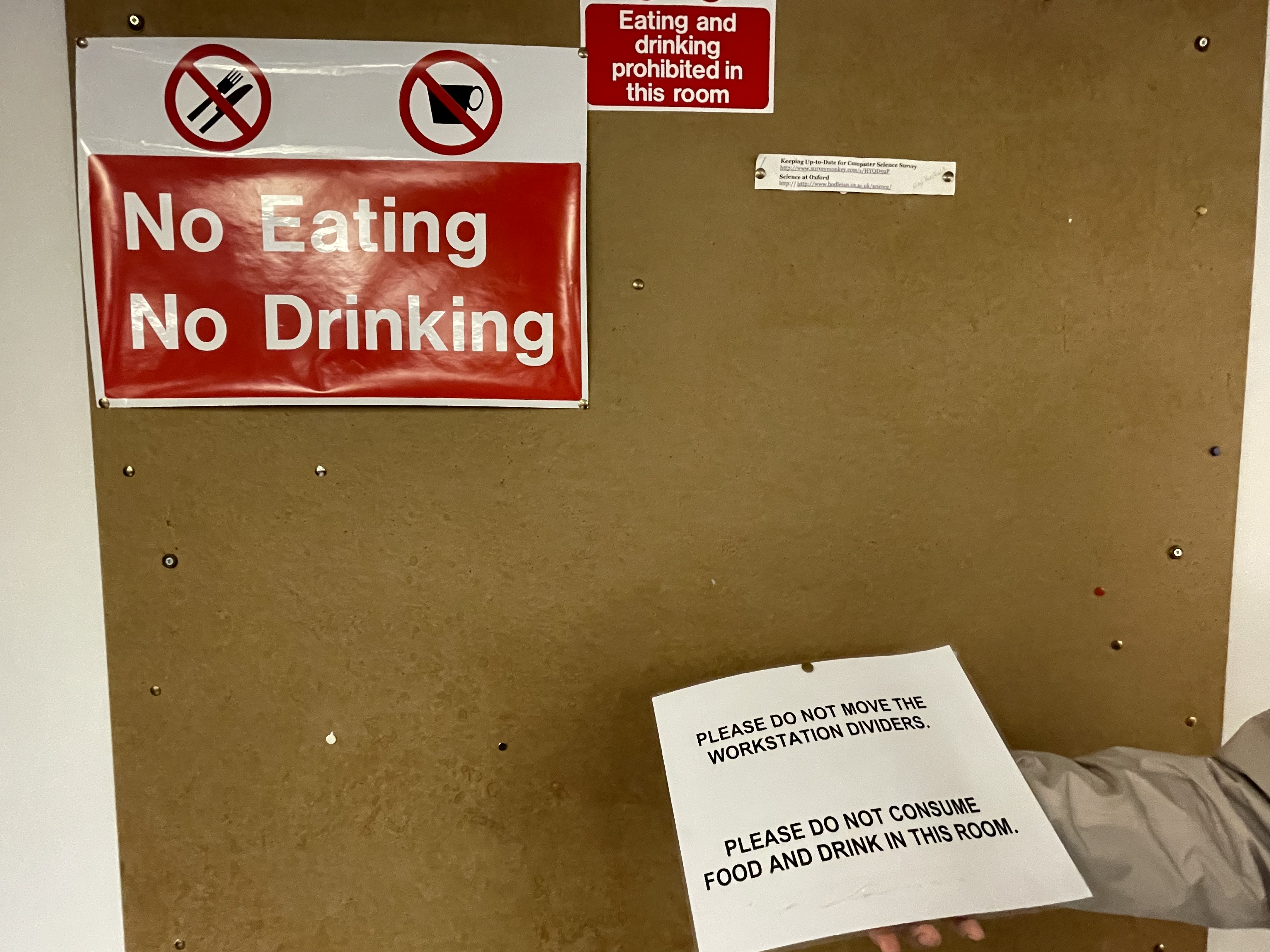

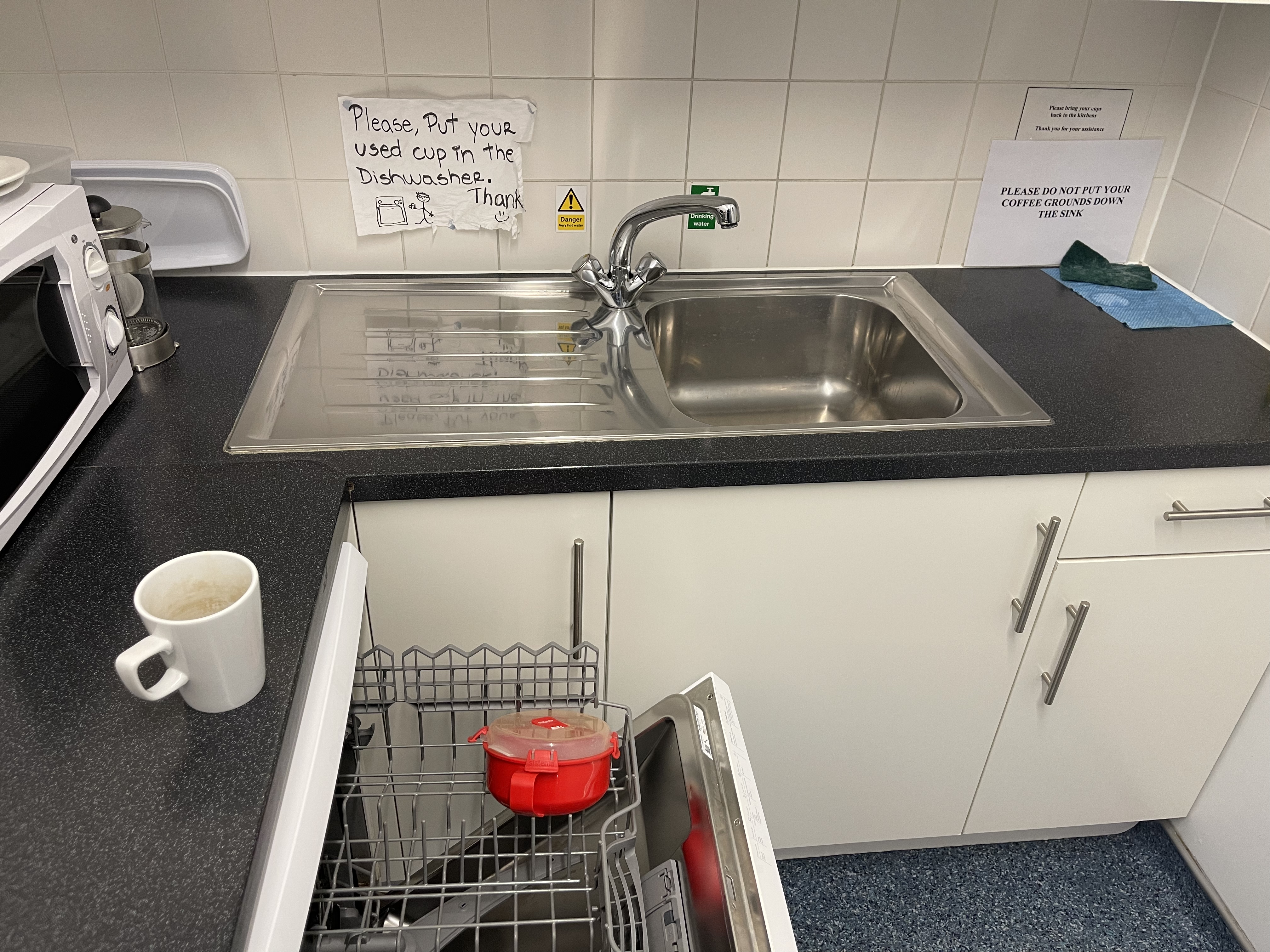

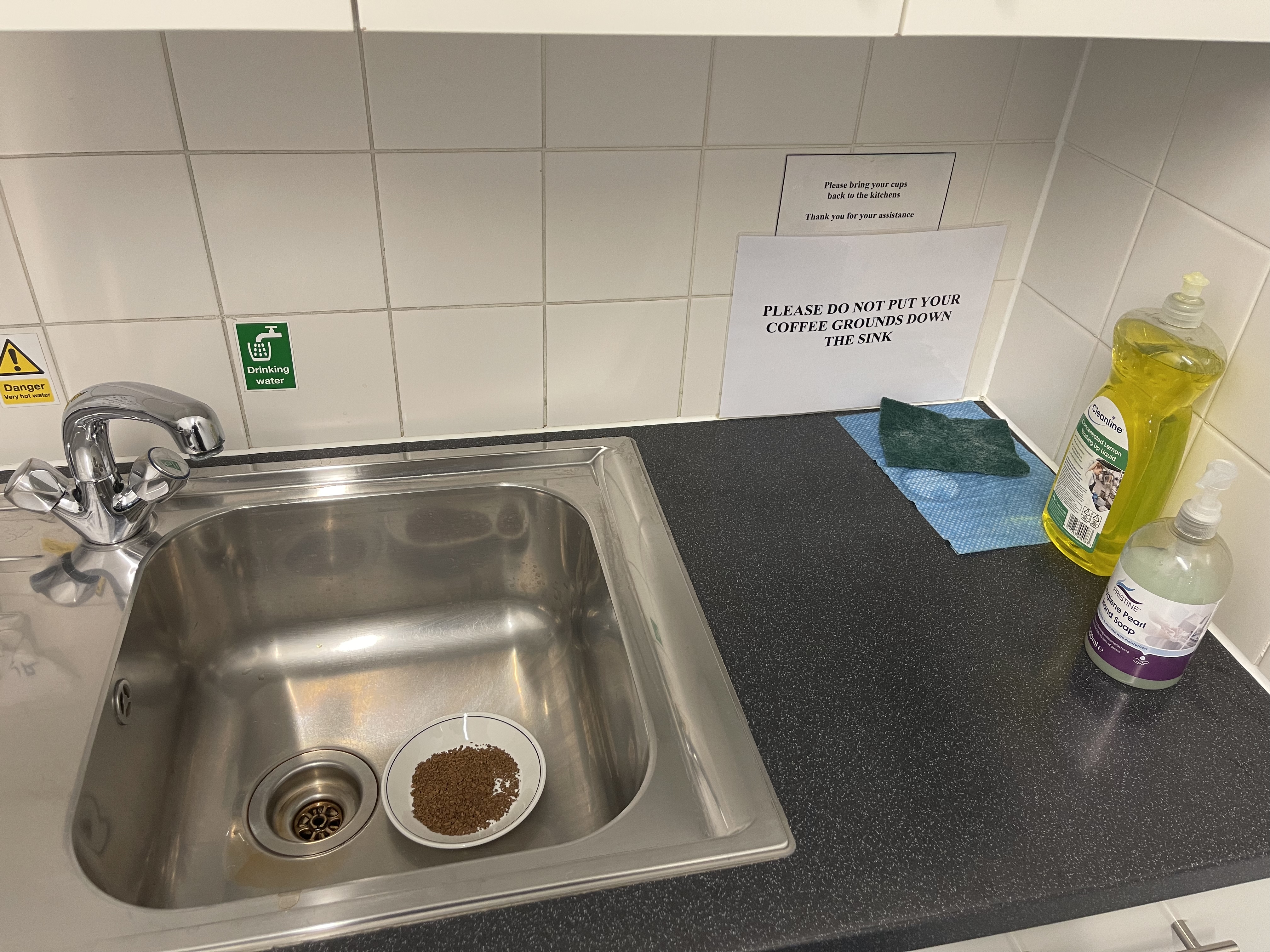

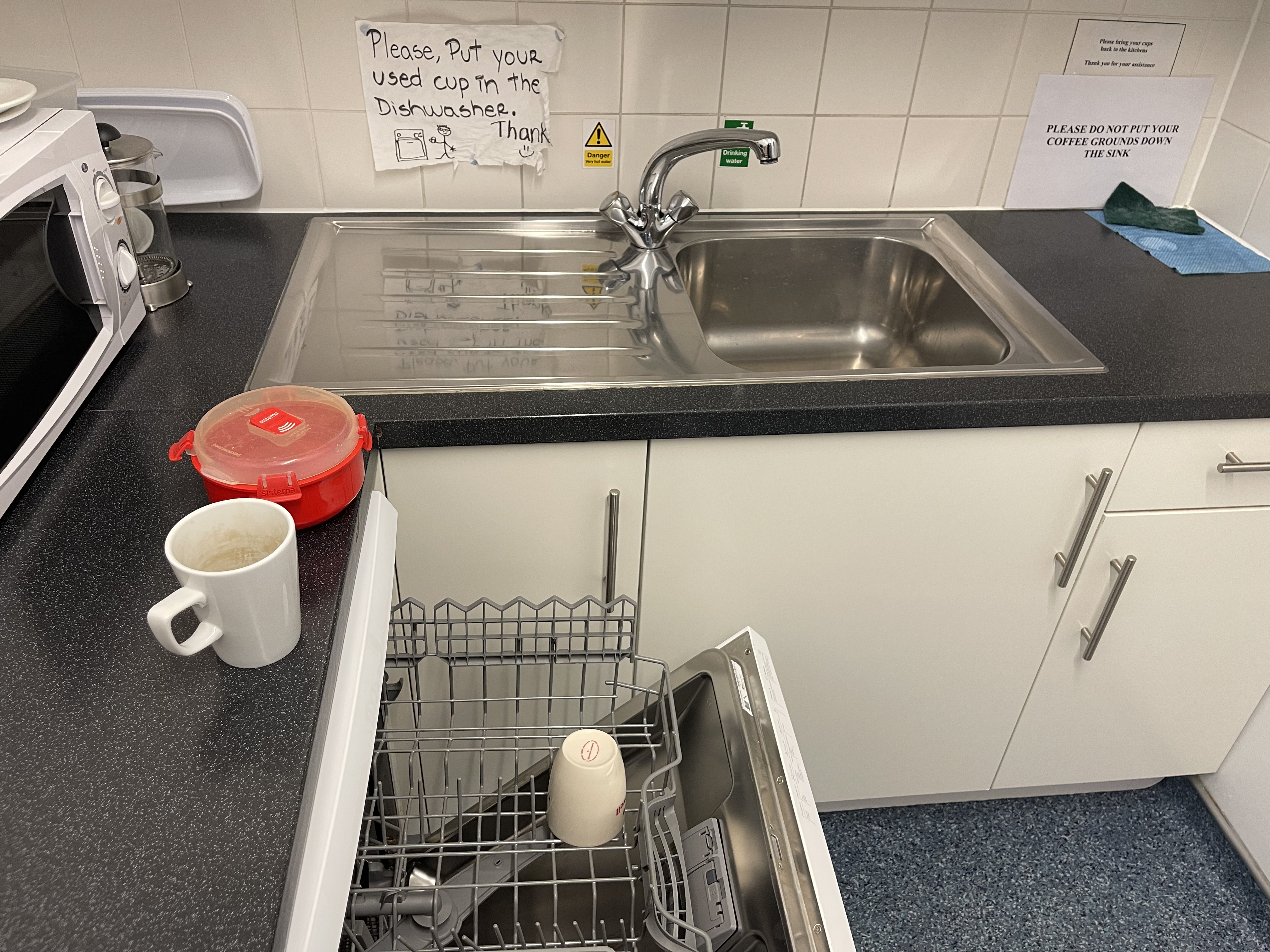

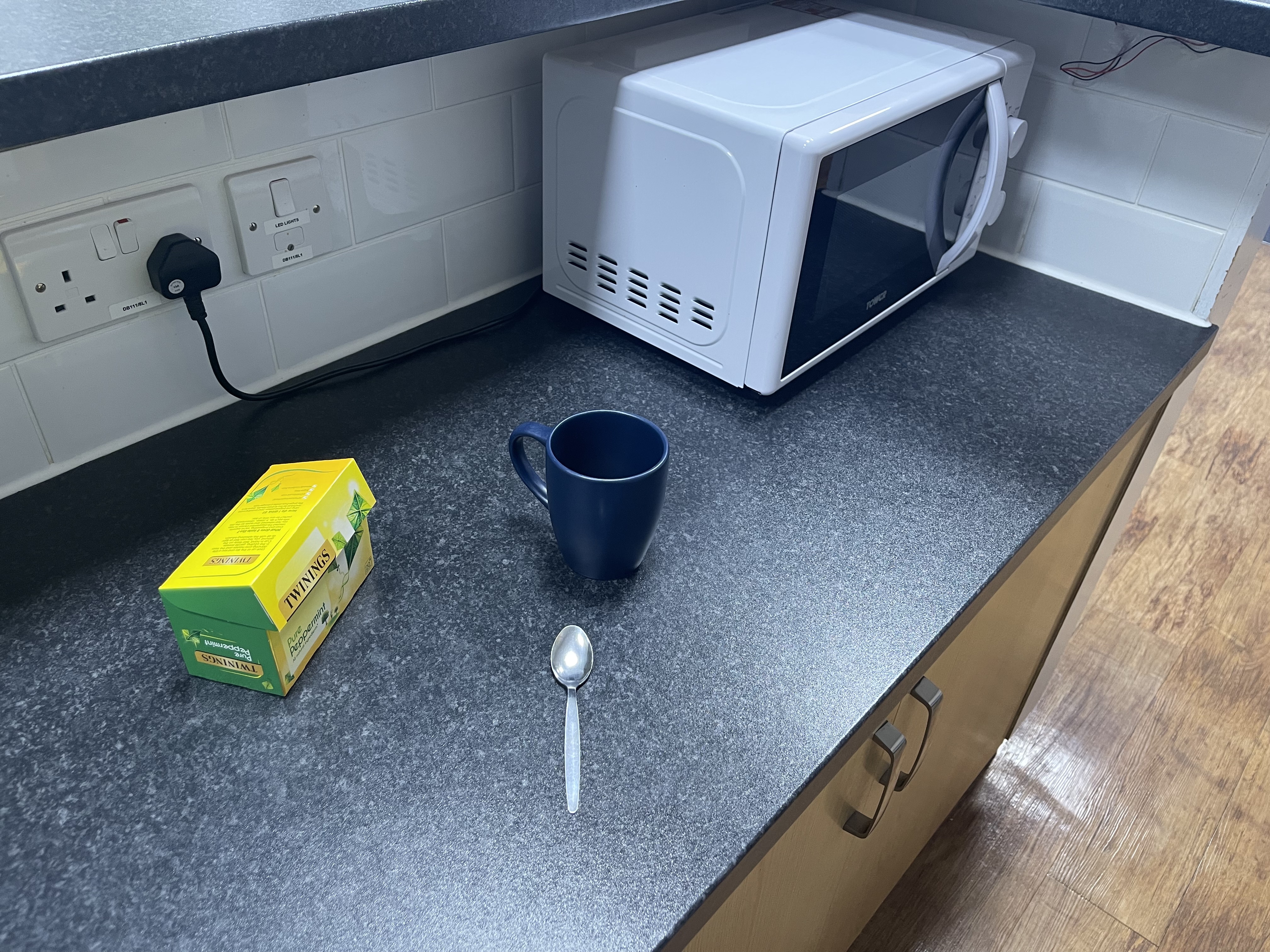

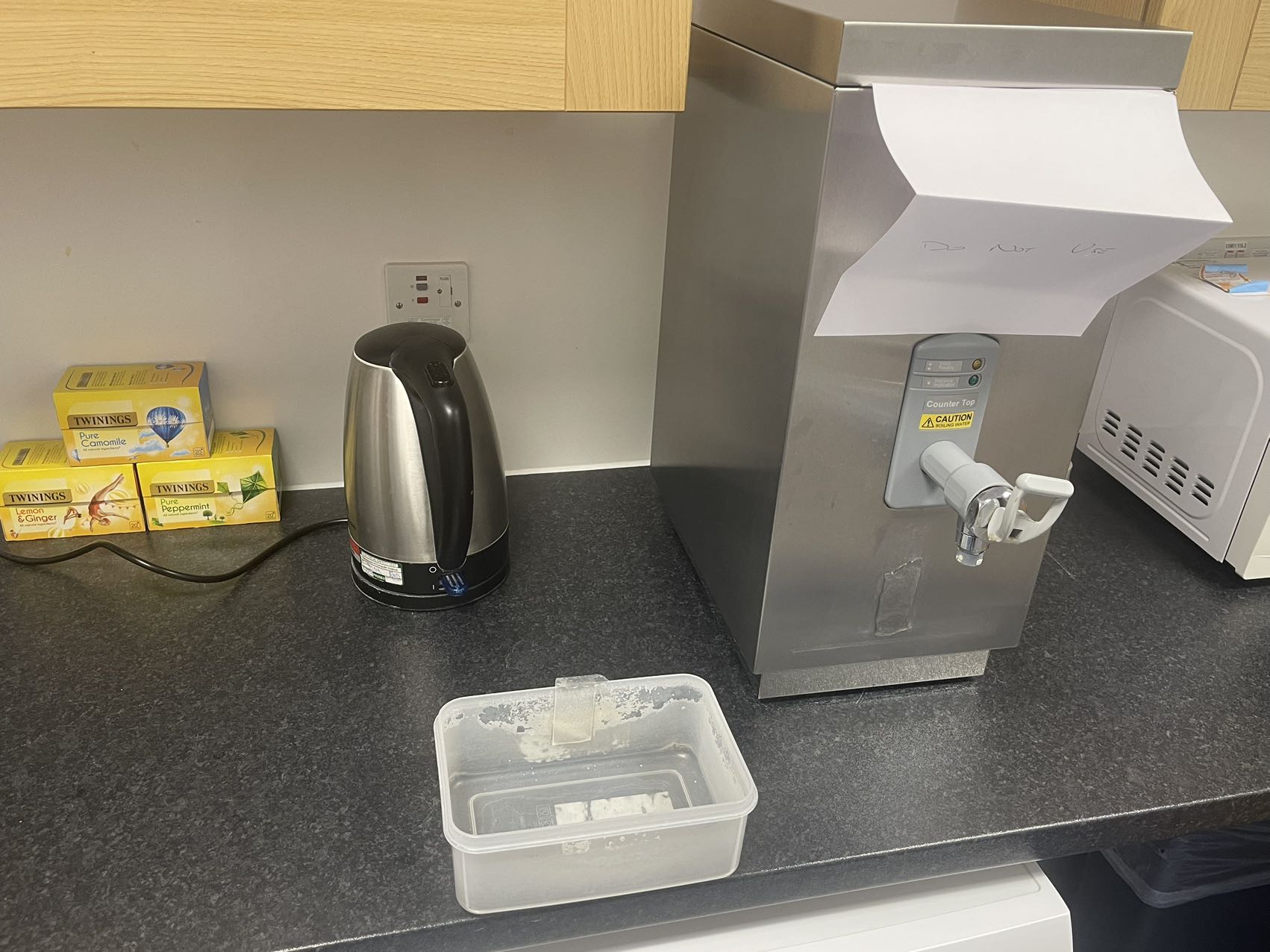

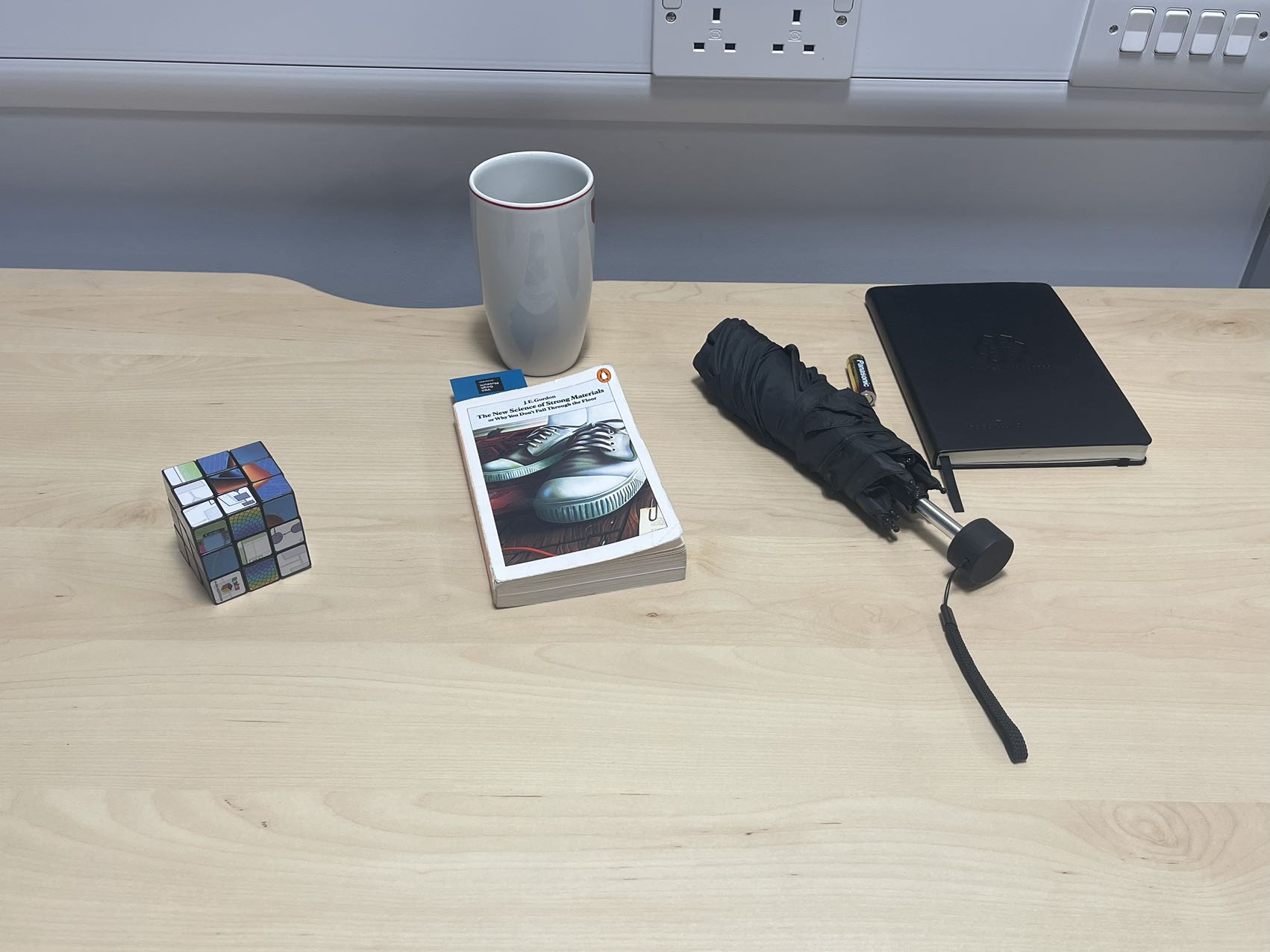

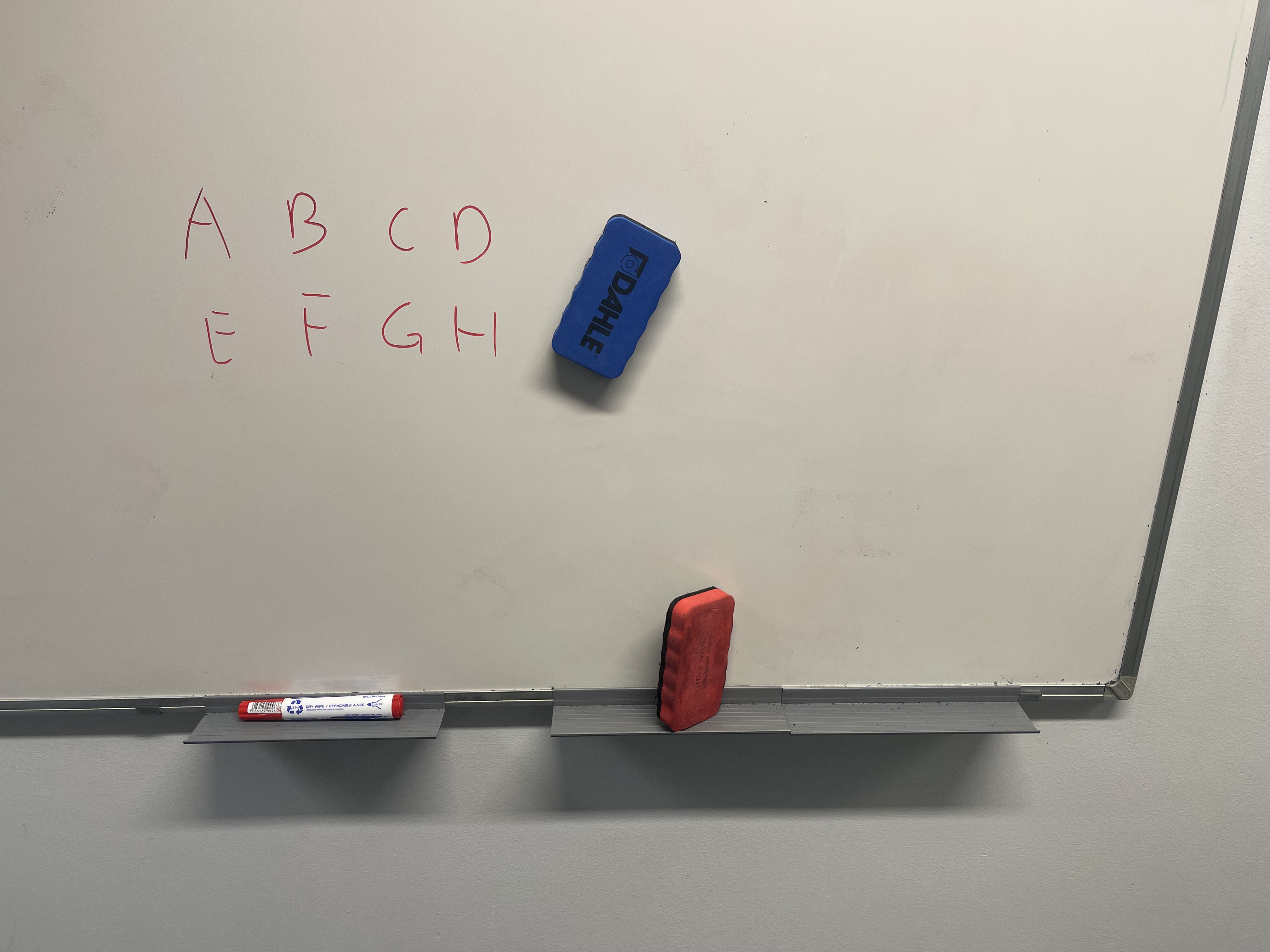

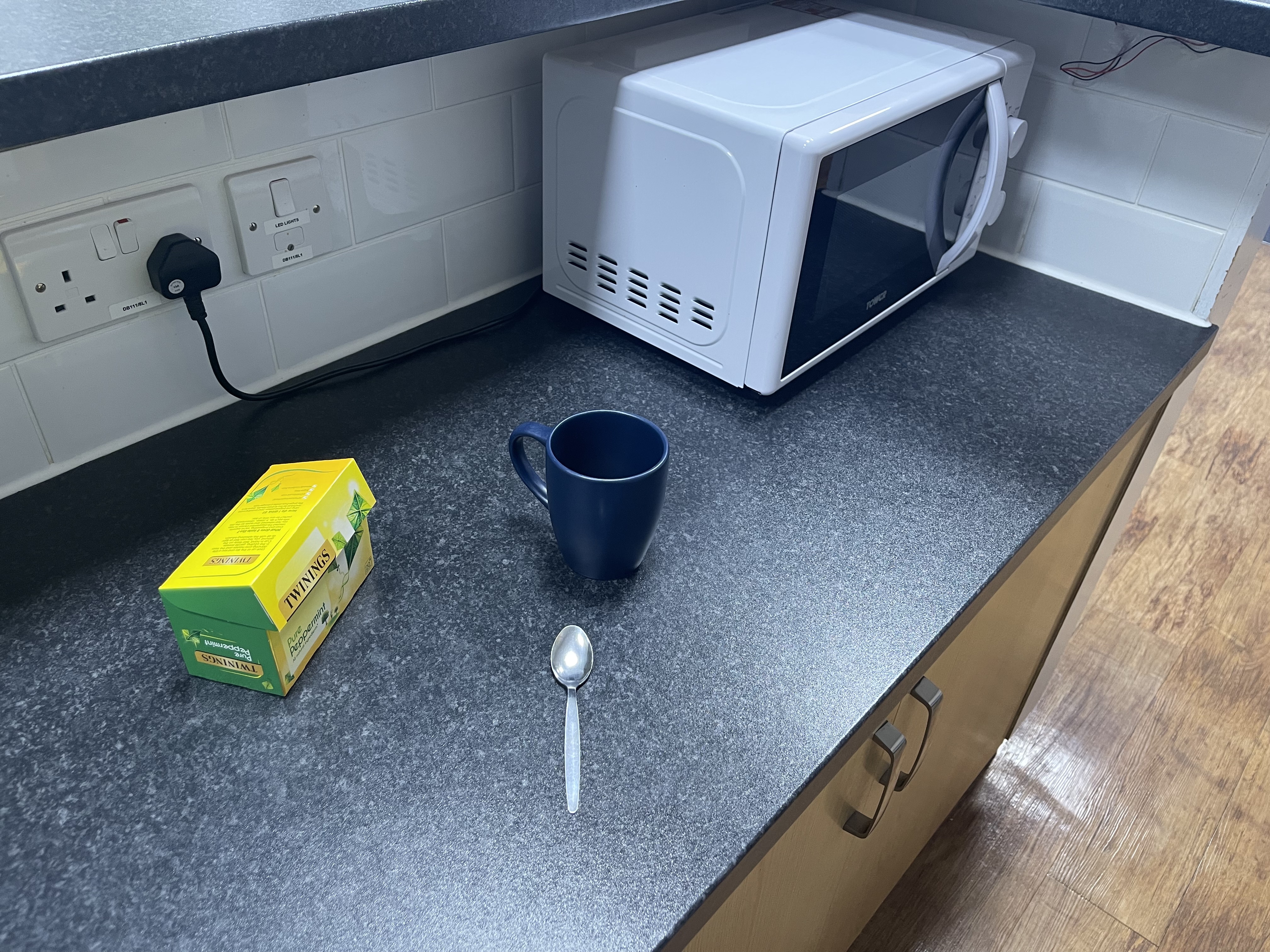

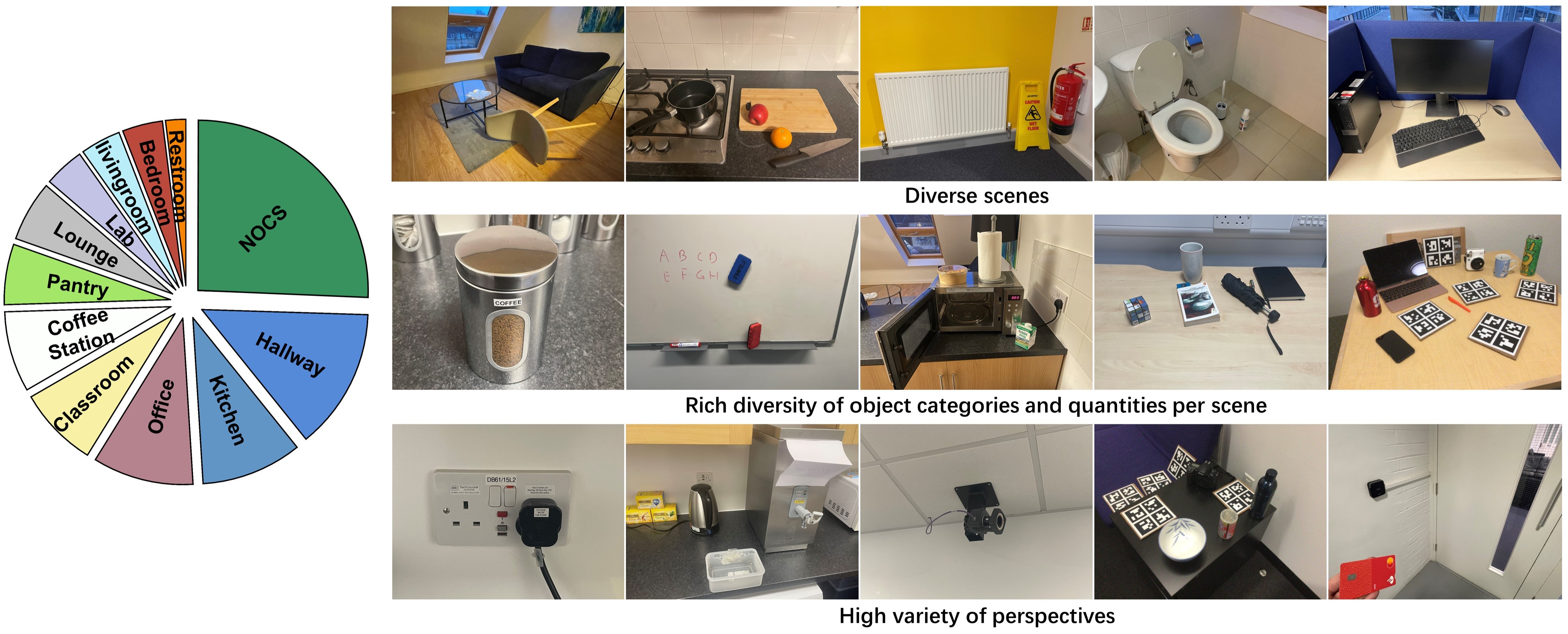

As we are the first to propose zero-shot task hallucination, no pre-existing dataset is available for evaluation. Therefore, we craft a diverse evaluation dataset by combining self-captured photos and scenes from the NOCS dataset. We capture 38 photos using an iPhone 12 Pro Max. From NOCS, we use the real-world portion of both the training and test sets, which encompasses 13 distinct scenes. Our dataset covers diverse scenes (e.g., office, kitchen, bathroom) and features 116 object categories and 185 objects in total, with each image containing 1 to 7 objects and 1 to 3 tasks proposed for each object (278 tasks, task videos, and planned trajectories in total). The dataset's diversity is further enhanced by the variety of perspectives from which the images are captured or selected (e.g., frontal, top-down, side views).

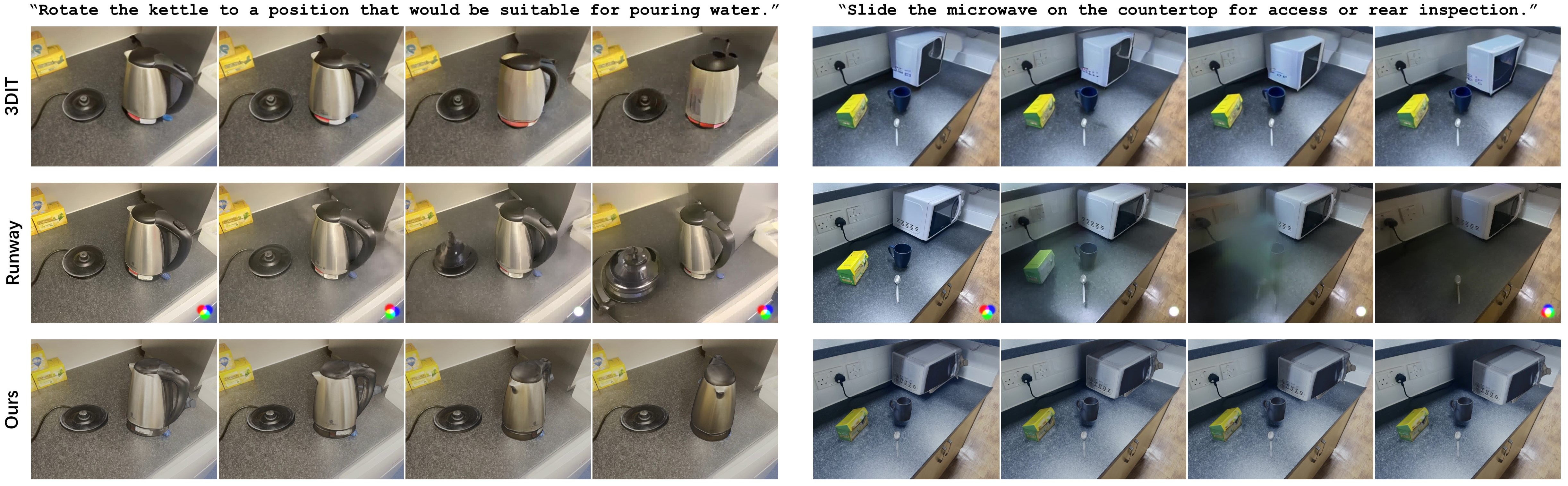

We compare against two state-of-the-art models: 3DIT for 3D-aware image editing and Runway Gen-2 for video generation. We limit comparisons to rotation and translation tasks to avoid bias against 3DIT and Runway in more nuanced context-dependent tasks (e.g., slicing an apple), where their performance falls short. For 3DIT, we generate frames along our planned trajectories and connect them into a video. For Runway, we prompt it with task descriptions.